by Gilles Teniou (Tencent), Emmanuel Thomas (Xiaomi), Thomas Stockhammer (Qualcomm)

First published June 2024, in Highlights Issue 08

Over the past few years, popular applications have made Augmented Reality (AR) experiences common for smartphone users. At the same time, a wide range of AR-capable devices beyond smartphones has emerged, such as AR glasses and Mixed Reality Head-Mounted Displays (HMDs).

For service providers and application developers, addressing this wide spectrum of devices and their capabilities is a challenge that could slow the wider deployment of AR services. The 3GPP TS 26.119 [1] specification aims to create a higher degree of interoperability between application providers, content creators and manufacturers by consolidating the media capabilities of AR-capable devices into a handful of well-defined device categories.

The XR baseline terminal architecture

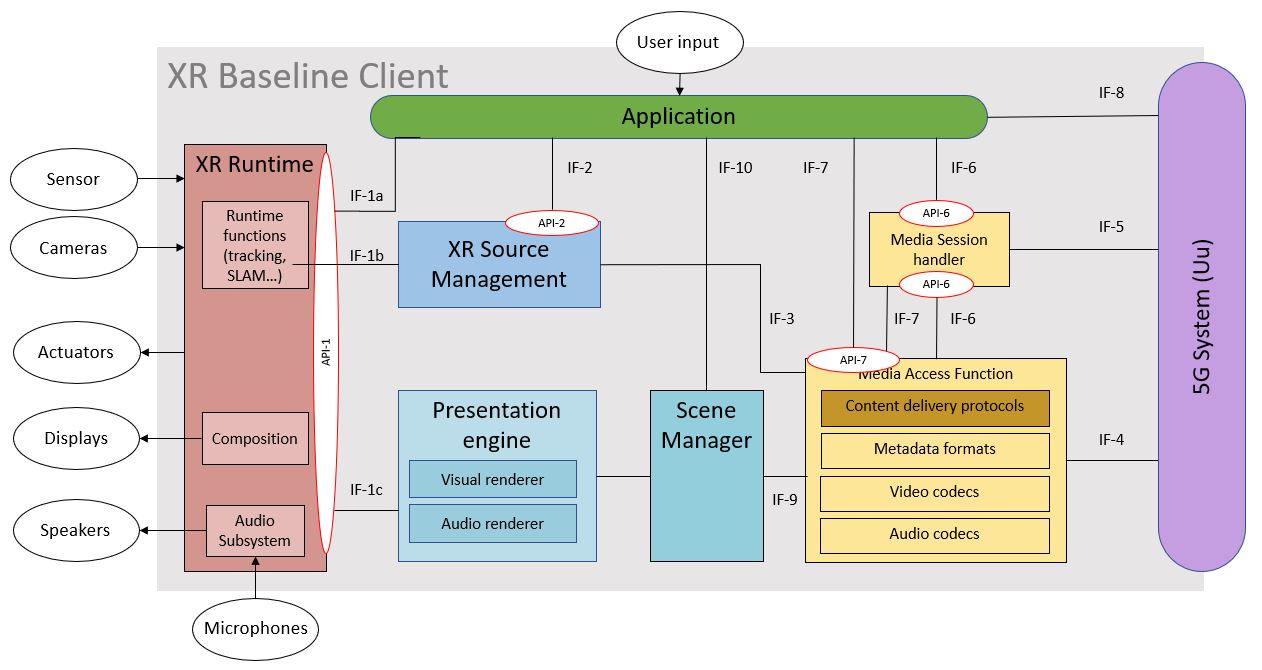

Because AR is considered one modality on the XR (eXtended Reality) spectrum, and to ensure consistency across the different AR-capable device categories, 3GPP TS 26.119 specifies an XR client in the UE that supports a common architecture called the XR Baseline client, shown in Figure 1.

Figure 1 - XR Baseline terminal architecture and interfaces

This XR client in UE terminal architecture, developed for AR but also suitable for any XR experience, is in line with industry standards such as the OpenXR specification [2] defined by the Khronos Group, which jointly addresses AR, MR (Mixed Reality) and VR (Virtual Reality) experiences. At the top of the architecture sits the Application, which is responsible for orchestrating the various components to provide the AR experience.

Three parts of the XR Baseline terminal architecture are worth highlighting here:

- The XR Runtime is responsible for the final audio and visual rendering based on the views supplied by the Presentation Engine. The XR Runtime is typically defined by an XR industry framework such as OpenXR.

- The XR Runtime also exposes sensor data, such as the pose prediction, which the XR Source Management can retrieve and package before transport.

- The Media Access Function is the media powerhouse to process the delivered scene description and to decompress the audio and video data received via the 5G System which composes the immersive

Device categories and capabilities

Based on the XR client in UE terminal architecture shown in Figure 1, TS 26.119 defines four device categories targeting various form factors: Thin AR glasses, AR glasses, XR phone and XR Head-Mounted Display (HMD). The Thin AR glasses device type represents power-constrained devices with limited computing power (typically fashionable form factors), as opposed to the AR glasses device type, which has higher computing power.

The XR phone device type covers smartphones with capabilities and resources sufficient to offer AR experiences.

Lastly, the XR HMD device type corresponds to HMDs that are at least capable of offering AR experiences, without precluding other types of XR experiences.

For each device type, TS 26.119 specifies the mandatory and optional media capabilities to be supported by the UE. These media capabilities pertain to audio, video, scene processing and XR systems, and are defined in terms of support for audio codecs (EVS [3], IVAS [4] and AAC-ELDv2 [5]), video codecs (AVC [6] and HEVC [7]) and scene description formats (glTF 2.0 [8] and its extension in MPEG-I Scene Description [9]).
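As an illustration of how an application might work with such capability sets, here is a minimal Python sketch that models the four device categories and reports which required capabilities a device lacks. The names `DeviceCategory`, `MediaCapabilities`, `DeviceProfile` and `missing_capabilities`, and the example capability sets, are hypothetical and not taken from TS 26.119; which capabilities are mandatory or optional for each category is defined only in the specification itself.

```python
from dataclasses import dataclass, field
from enum import Enum


class DeviceCategory(Enum):
    """The four device categories named in the article."""
    THIN_AR_GLASSES = "Thin AR glasses"
    AR_GLASSES = "AR glasses"
    XR_PHONE = "XR phone"
    XR_HMD = "XR HMD"


@dataclass(frozen=True)
class MediaCapabilities:
    """Hypothetical container for three of the capability areas."""
    audio_codecs: frozenset = field(default_factory=frozenset)
    video_codecs: frozenset = field(default_factory=frozenset)
    scene_formats: frozenset = field(default_factory=frozenset)


@dataclass(frozen=True)
class DeviceProfile:
    """Hypothetical pairing of a category with declared capabilities."""
    category: DeviceCategory
    capabilities: MediaCapabilities


def missing_capabilities(device: MediaCapabilities,
                         required: MediaCapabilities) -> dict:
    """Return, per capability area, the required items the device lacks."""
    gaps = {
        "audio_codecs": required.audio_codecs - device.audio_codecs,
        "video_codecs": required.video_codecs - device.video_codecs,
        "scene_formats": required.scene_formats - device.scene_formats,
    }
    # Keep only the areas where something is actually missing.
    return {area: sorted(items) for area, items in gaps.items() if items}


# Illustrative values only; they do not reflect the real requirements.
profile = DeviceProfile(
    category=DeviceCategory.XR_PHONE,
    capabilities=MediaCapabilities(
        audio_codecs=frozenset({"EVS", "AAC-ELDv2"}),
        video_codecs=frozenset({"AVC", "HEVC"}),
        scene_formats=frozenset({"glTF 2.0"}),
    ),
)
service_needs = MediaCapabilities(
    audio_codecs=frozenset({"EVS", "IVAS"}),
    video_codecs=frozenset({"HEVC"}),
    scene_formats=frozenset({"glTF 2.0"}),
)
print(missing_capabilities(profile.capabilities, service_needs))
# prints {'audio_codecs': ['IVAS']}
```

Using sets keeps the check independent of ordering and makes the gap between what a service needs and what a device declares a simple set difference.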

Regarding metadata, TS 26.119 defines common metadata: predicted pose information; the action object, which represents actions performed by a user of an AR application; and the available visualization space object, which represents a 3D space within the user’s real-world space that is suitable for rendering virtual objects.
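The three metadata items can be pictured as simple records. The Python sketch below is only an illustration: the field names, the units and the choice of an axis-aligned box for the visualization space are assumptions made here, not the formats defined in TS 26.119.

```python
from dataclasses import dataclass
from typing import Tuple

Vec3 = Tuple[float, float, float]
Quaternion = Tuple[float, float, float, float]


@dataclass(frozen=True)
class PredictedPose:
    """Hypothetical record for a pose predicted for a future time."""
    position: Vec3           # assumed to be in metres
    orientation: Quaternion  # assumed unit quaternion (x, y, z, w)
    predicted_time_ns: int   # time the prediction applies to


@dataclass(frozen=True)
class Action:
    """Hypothetical record for an action performed by the user."""
    name: str
    timestamp_ns: int


@dataclass(frozen=True)
class AvailableVisualizationSpace:
    """3D space suitable for rendering virtual objects.

    Assumed here to be an axis-aligned box, purely for illustration.
    """
    min_corner: Vec3
    max_corner: Vec3

    def contains(self, point: Vec3) -> bool:
        """True if the point lies inside the box on every axis."""
        return all(
            lo <= p <= hi
            for lo, p, hi in zip(self.min_corner, point, self.max_corner)
        )


space = AvailableVisualizationSpace((-1.0, 0.0, -1.0), (1.0, 2.0, 1.0))
pose = PredictedPose((0.0, 1.6, 0.0), (0.0, 0.0, 0.0, 1.0), 16_000_000)
print(space.contains(pose.position))    # prints True
print(space.contains((0.0, 3.0, 0.0)))  # prints False
```

A check such as `contains` shows the kind of decision the visualization space supports: whether a virtual object placed at a given point would fall inside the space that is suitable for rendering.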

Other related specifications

TS 26.119 has been designed to serve as a common platform on which specifications addressing applications and services can build. In this spirit, 3GPP TSG SA WG4 (SA4) has concurrently been developing two specifications that refer to TS 26.119:

- TS 26.565 [10] on Split Rendering Media Service Enabler

- TS 26.264 [11] on IMS-based AR Real-Time Communication.

These two specifications rely on the capabilities defined in TS 26.119, but also extend them for the specific needs of their targeted applications and services.

Media capabilities for AR are expected to be maintained by 3GPP SA4 to align with any market evolution and the development of new devices.

For more on WG SA4: www.3gpp.org/3gpp-groups

References

- [1] 3GPP TS 26.119: "Device Media Capabilities for Augmented Reality Services".

- [2] The OpenXR™ Specification 1.0, The Khronos® OpenXR Working Group.

- [3] 3GPP TS 26.441: "Codec for Enhanced Voice Services (EVS); General Overview".

- [4] 3GPP TS 26.250: "Codec for Immersive Voice and Audio Services (IVAS); General overview".

- [5] 3GPP TS 26.401: "General audio codec audio processing functions; Enhanced aacPlus general audio codec; General description".

- [6] ITU-T Recommendation H.264 (08/2021): "Advanced video coding for generic audiovisual services".

- [7] ITU-T Recommendation H.265 (08/2021): "High efficiency video coding".

- [8] ISO/IEC 12113:2022: "Information technology - Runtime 3D asset delivery format - Khronos glTF™ 2.0".

- [9] ISO/IEC 23090-14:2023: "Information technology - Coded representation of immersive media - Part 14: Scene description".

- [10] 3GPP TS 26.565: "Split Rendering Media Service Enabler".

- [11] 3GPP TS 26.264: "IMS-based AR Real-Time Communication".
